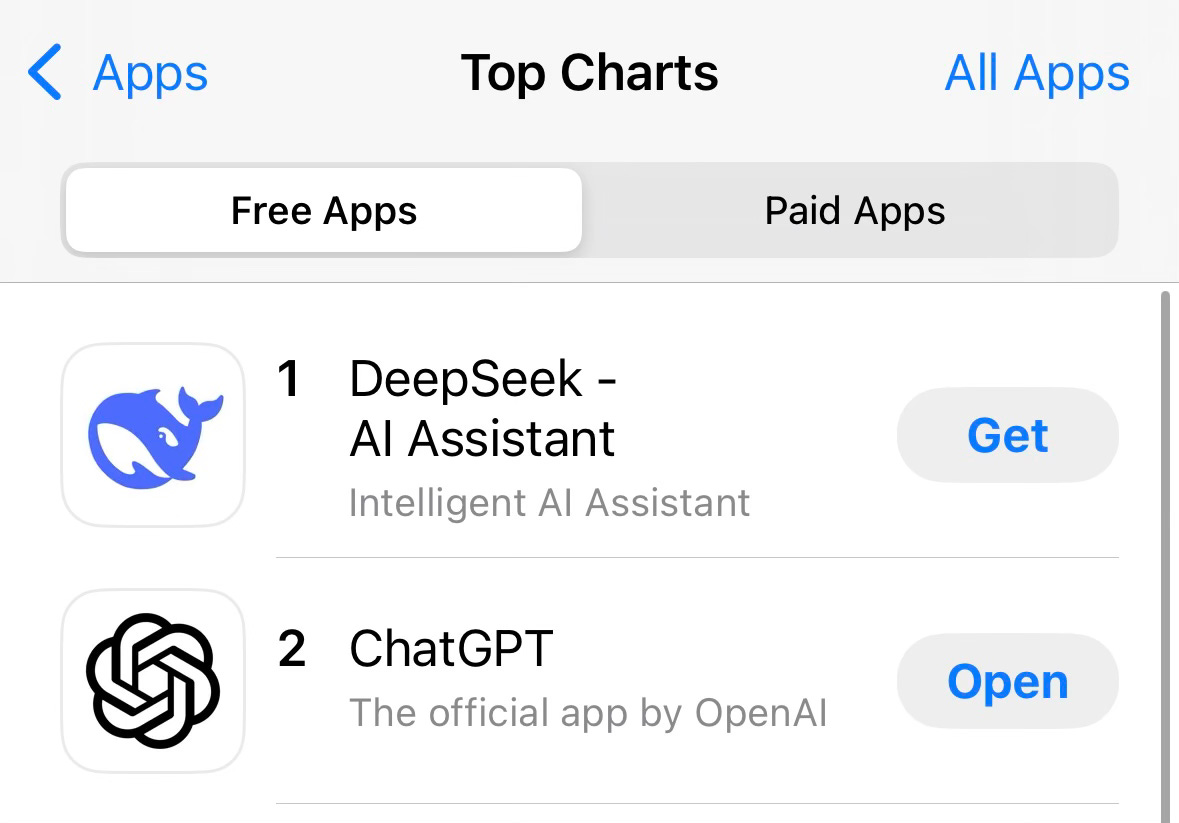

As I write this, gauging NVIDIA’s market cap is like trying to catch a falling knife. That’s because DeepSeek announced that it has developed a competitor to the ChatGPTs of the world at a small fraction of the cost.

For context, the existing American large language models (LLMs) run on NVIDIA GPUs that cost hundreds of millions of dollars in total. DeepSeek has reportedly achieved results similar to those of its American competitors while spending only ~$6 million.

Bad news for NVIDIA (for now). It’s hard to justify a massive market cap in the trillions when it looks, at least on its face, like there’s a potentially cheaper, more efficient way to compete.

Words of caution, however. Yes, DeepSeek is somewhat open source (the research paper is very detailed, but it’s light on what training data was used). People are checking its model and other technical aspects, but we still don’t know the full story.

Markets will sell off. People will overreact. But it’s important to keep a few fundamental principles and considerations in mind:

Even if DeepSeek can perform to similar standards set by OpenAI, Anthropic, Google, Meta, etc., the American tech companies are still ahead of the game. American hardware is still better, and I remain bullish on American AI tech because software is dependent on hardware.

DeepSeek was developed in China and therefore must adhere to Chinese laws and oversight. What this means is if you ask the model certain questions about the CCP and events like Tiananmen Square, the model won’t answer. Democratic systems will beat autocratic models in the long term because there are substantially fewer knowledge and speech restrictions.

The DeepSeek innovation can be boiled down to cost-cutting and efficiency gains. They didn’t do anything magical here, although I do agree with Marc Andreessen that this is America’s Sputnik moment in AI. What DeepSeek demonstrated is how to do more with less given the export restrictions and their inability to purchase certain NVIDIA chips at scale (unlike their competitors).

This is a classic case of a resource-constrained startup eating the lunch of entrenched players. It’s also a massive warning shot that the concentration of economic power in a few industry heavyweights is not great for competition. Perhaps Lina Khan was right after all, at least in part, that certain mergers and acquisitions should not be permitted in tech and that the monopolistic power of big tech firms must be checked (although in my view she didn’t do enough to check their power and focused too much on M&A).

American big tech firms should be able to replicate and make further advancements on DeepSeek’s model, but the big question is how many NVIDIA chips they’ll need to do it now and moving forward. The biggest threat to NVIDIA’s moat may turn out to be not a new direct competitor but efficiency gains made by its own downstream customers.

If I put my prognosticator cap on, I don’t think we know the full details of DeepSeek’s model yet. There has to be a catch aside from its unwillingness to answer certain politically sensitive queries. Perhaps we’ll hear what that is in the coming days, but if I had to guess, I would be surprised if DeepSeek could compete with its American competitors on more technically difficult or nuanced prompts. Time will tell, of course.

Below are helpful links if you want to understand the DeepSeek AI development further. Before we get there, though, here are two stories you might find interesting. The first is about my recent jury duty experience. The second is a short essay I wrote 5 years ago after the death of Kobe Bryant, one of my favorite athletes of all time. Yesterday was the fifth anniversary of Kobe’s death.

Jury Duty: I groaned but I appreciated it

I recently sat through a day of jury duty in New York. Yes, I complained when I first received the summons. But it ended up being a good experience that I appreciated more once I was there and upon reflection.

Perhaps that’s because I wasn’t questioned or empaneled. I’m sure I would feel a little differently if I had been committed to a trial lasting months.

You can read more about my experience here.

Kobe Bryant and the enduring legacy of the Black Mamba

I wanted to share this essay I wrote shortly after Kobe Bryant’s tragic death 5 years ago. For all of his flaws, Kobe was “your favorite basketball player’s favorite basketball player.”

For those of you who are not basketball fans, take any craft that interests you. Cooking, art, photography, design, etc. Think about the top creators or innovators in those industries. If you put Kobe in any of them, the top people would respect or admire him the most. They may hate to compete with him or roll their eyes at his intensity, but secretly or not, they would love him.

I am usually unmoved by celebrity deaths, but Kobe’s passing affected me deeply.

You can read more in my essay here.

Helpful links about DeepSeek AI

In case you want to nerd out on the research paper itself

Good thread on why DeepSeek is not truly “open source” (i.e., training data is not provided in detail in the DeepSeek papers)

Why NVIDIA may not be in trouble (especially if you’re bullish on robots and holodecks)

Good food for thought on why this might be too good to be true. And before you download DeepSeek AI, read its privacy policy (spoiler: it’s horrendous).

On a lighter note…

This Brandy Library short is blowing up on Instagram (Brandy Library is currently my favorite bar in Manhattan)

Have a good week.

This has been the buzz in my circle today.

Like you said, even with DeepSeek's impressive efficiency gains, they're still constrained by computing architecture. Though I wonder if this might push innovation in unexpected directions, kind of like how resource constraints in mobile development led to entirely new approaches.

I'm curious how you see the democratic/autocratic AI development split playing out. Some folks in my network argue it's the defining factor, while others see it as less significant than raw technical capabilities. Only time will tell, John.