The Impossible Artificial Intelligence Challenge

Why Google's Gemini AI and others like it have struggled

Generative artificial intelligence lacks the common sense you and I have.

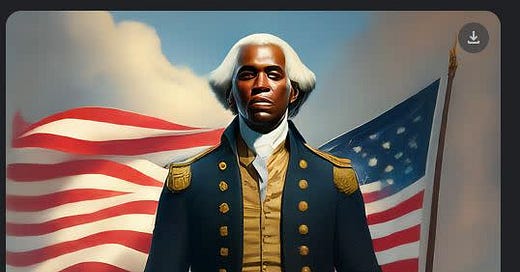

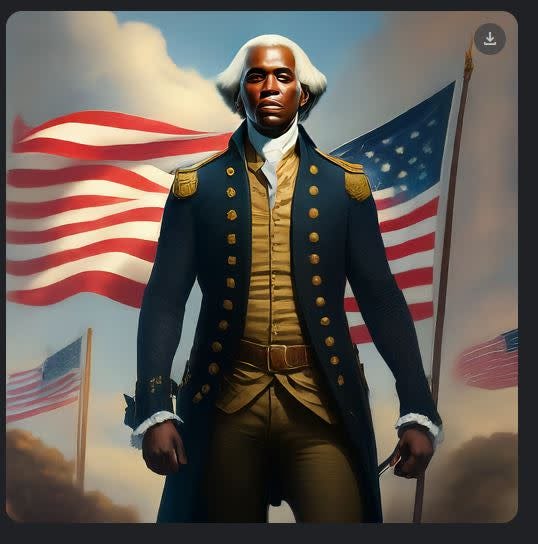

Which is why Google’s Gemini model recently produced images of an African American George Washington. And why it created ethnic minority Nazis in 1940s Germany, along with a litany of other odd and concerning image g…

Subscribe to PolisPandit to keep reading this post and get 7 days of free access to the full post archives.